Modernizing Object Storage for Cloud Native Deployments

- 3 August 2021

- 15 minute read

Why should you care?

Data storage is a universal need. Structured data goes into familiar stores like an RDBMS (PostgreSQL, MySQL, Oracle), but unstructured data can be housed in many ways: object storage systems, key-value caches, document stores (if there’s some structure), and even flat files on a file system.

This article details how and why your choice of unstructured storage:

- affects your scalability by making or breaking your cloud native capability,

- balloons your software maintenance cost, and

- limits the possible savings you could get on your cloud storage expenses.

We take you on our journey from a home-grown, flat-file-based object storage layer to an off-the-shelf approach with MinIO, which saved us 30% in storage costs and 90% in maintenance costs.

Object Storage at Scale

Soroco’s product Scout collects millions of data points every day from interactions between teams and business applications, during the natural course of a workday. From the collected interactions, Scout detects patterns in the data using various machine learning algorithms to help our customers find opportunities for operational improvement.

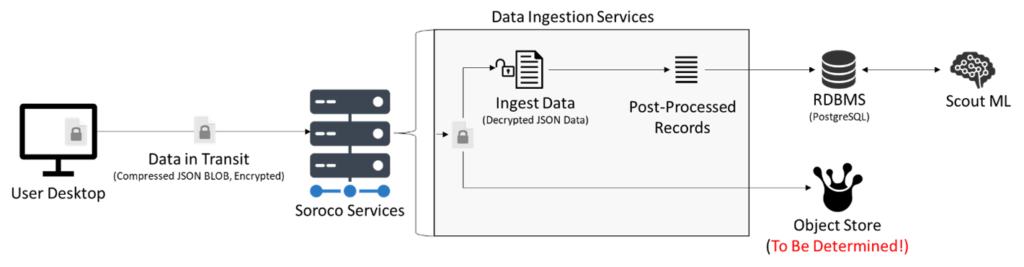

Below, we show the flow of this information via an example using Scout events. Scout events are represented as JSON objects that are buffered in memory and then periodically stored in compressed, encrypted JSON files on disk. Compression minimizes the network bandwidth and storage requirements. Encryption protects sensitive data at rest and in flight. Buffering saves compute resources by batch processing events. These services can be in the cloud, or on premises if the customer prefers it. Scout’s data ingestion services then decrypt and decompress the JSON data after which the individual records are post-processed and stored in an RDBMS. The records can then be fetched by our various machine learning algorithms.

A sketch of information flow in Scout
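The buffering and compression steps described above can be sketched roughly as follows. This is a minimal illustration, not Scout's actual implementation: the `EventBuffer` class, the `decode_batch` helper, and the batch size are hypothetical names and values chosen for the example, and encryption is left out because it would need a third-party library.

```python
import gzip
import json
from typing import Any, Dict, List, Optional


class EventBuffer:
    """Collects events in memory and emits them as one compressed batch."""

    def __init__(self, max_events: int = 1000) -> None:
        self.max_events = max_events  # hypothetical batch size
        self._events: List[Dict[str, Any]] = []

    def add(self, event: Dict[str, Any]) -> Optional[bytes]:
        """Buffer one event; return a compressed batch once the buffer is full."""
        self._events.append(event)
        if len(self._events) >= self.max_events:
            return self.flush()
        return None

    def flush(self) -> bytes:
        """Serialize the buffered events as JSON, gzip them, and reset the buffer."""
        payload = json.dumps(self._events).encode("utf-8")
        self._events = []
        # Encryption is omitted in this sketch; it would be applied to the
        # compressed bytes before they are written to disk or sent.
        return gzip.compress(payload)


def decode_batch(blob: bytes) -> List[Dict[str, Any]]:
    """Ingestion side: decompress a batch and parse the JSON records."""
    return json.loads(gzip.decompress(blob).decode("utf-8"))
```

Batching this way means compression runs once per batch rather than once per event, which is where the compute savings mentioned above come from.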

We must store the original data though, because post-processing might transform or accidentally drop data that we find useful in the future. For example, an updated machine learning algorithm might want a re-interpretation of the features from the original samples. If we threw them away after post-processing, we could never go back to the original data to improve results. Of course, we also have to store screenshots somewhere, and our RDBMS did not seem like a good choice. A contributor to PostgreSQL benchmarked the performance of object storage in PostgreSQL as compared to disk and found a 10x slowdown in a read-based benchmark. You don’t want to store objects in PostgreSQL!

A typical large-scale deployment spanning hundreds of teams and thousands of users ingests approximately 2B objects, equating to over 130 TB, per year (assuming 261 working days). The post-processed structured information stored in our RDBMS is orders of magnitude smaller because it is just the output of a feature engineering pipeline for machine learning algorithms.
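To put those yearly figures in daily terms, the short calculation below divides them by the 261 working days. It assumes decimal terabytes (10^12 bytes) and treats "over 130 TB" as exactly 130 TB, so the results are rough lower bounds rather than measured values.

```python
OBJECTS_PER_YEAR = 2_000_000_000   # approximately 2B objects
BYTES_PER_YEAR = 130 * 10**12      # 130 TB, assuming decimal terabytes
WORKING_DAYS = 261

objects_per_day = OBJECTS_PER_YEAR / WORKING_DAYS
bytes_per_day = BYTES_PER_YEAR / WORKING_DAYS
avg_object_bytes = BYTES_PER_YEAR / OBJECTS_PER_YEAR

print(f"{objects_per_day / 1e6:.1f} million objects per day")   # 7.7 million
print(f"{bytes_per_day / 1e9:.0f} GB per day")                  # 498 GB
print(f"{avg_object_bytes / 1e3:.0f} KB average object size")   # 65 KB
```

In other words, roughly half a terabyte of new objects arrives every working day, at an average of about 65 KB per object.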

In addition to the storage needs, the full set of requirements we came up with when looking for an object storage solution was:

- Handle our object storage requirements at scale

- Decouple storage from our local file system to reduce cost and maintenance

- Provide compression to reduce storage requirements

- Require minimal maintenance from our engineering team

- Support detailed access control lists to protect the original data files

- Integrate simply with cloud native storage services such as Amazon S3 and Azure Blob Storage

- Fall back to local storage when cloud native storage services are not available (e.g., on-premises)

A solution meeting all these requirements ought to be both cloud-native and scalable. This would let our product handle substantial retention periods (1 year or more), on-demand random access read workloads, and all of the deployment scenarios we care about (bare metal, private cloud, public cloud). In the remainder of this blog article, we present the different approaches and trade-offs that led to our final solution, which saved us 30% in storage costs and 90% in maintenance costs.

Considering our Options for Object Storage

There are a few common options for object storage that we considered while evaluating different designs to meet our requirements.

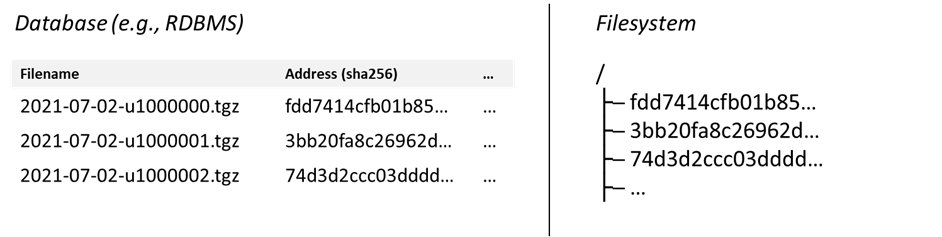

Filesystem-based Object Storage with References

A low-complexity solution to object storage is to store objects on the disk and keep references to the available objects with any important metadata in a database or index. Git is well known for doing this and implementing a style of it called content-addressable storage (CAS). An example of this is illustrated below.

As illustrated with a CAS system, objects are stored on the filesystem by their hash, and any metadata associated with them can be stored in a database or catalog.

Benefits of file-based object storage are simplicity in design, and if CAS is used you will get de-duplication of objects for more efficient storage since multiple references can map to the same object on disk. No specialized systems are required to track the objects, and access to them will be as easy as filesystem reads.
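A minimal sketch of content-addressable storage on a local filesystem shows where the de-duplication comes from. The `ContentStore` class and its directory layout are hypothetical, chosen for illustration (the two-character fan-out mirrors how Git lays out loose objects), and SHA-256 is an assumed hash choice.

```python
import hashlib
from pathlib import Path


class ContentStore:
    """Stores each object on disk under the hex digest of its content."""

    def __init__(self, root: Path) -> None:
        self.root = Path(root)
        self.root.mkdir(parents=True, exist_ok=True)

    def _path_for(self, digest: str) -> Path:
        # Fan out by the first two hex characters to keep directories small.
        return self.root / digest[:2] / digest[2:]

    def put(self, data: bytes) -> str:
        """Write the object if it is new and return its digest as the reference."""
        digest = hashlib.sha256(data).hexdigest()
        path = self._path_for(digest)
        if not path.exists():  # identical content is stored only once
            path.parent.mkdir(parents=True, exist_ok=True)
            path.write_bytes(data)
        return digest

    def get(self, digest: str) -> bytes:
        """Read an object back by its digest."""
        return self._path_for(digest).read_bytes()
```

The digest returned by `put` is what a database or catalog would record alongside the object's metadata; two references with the same digest share one file on disk.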

Downsides of filesystem-based object storage are maintenance, the lack of access control without building or using a more substantial system around it, and the absence of a shared filesystem in modern cloud native deployments, where services do not assume local storage. Though you could mount a network share, the performance impact of using an NFS share would likely be substantial. For these reasons, we believe that while this approach is fast and simple, it does not meet many of our requirements.

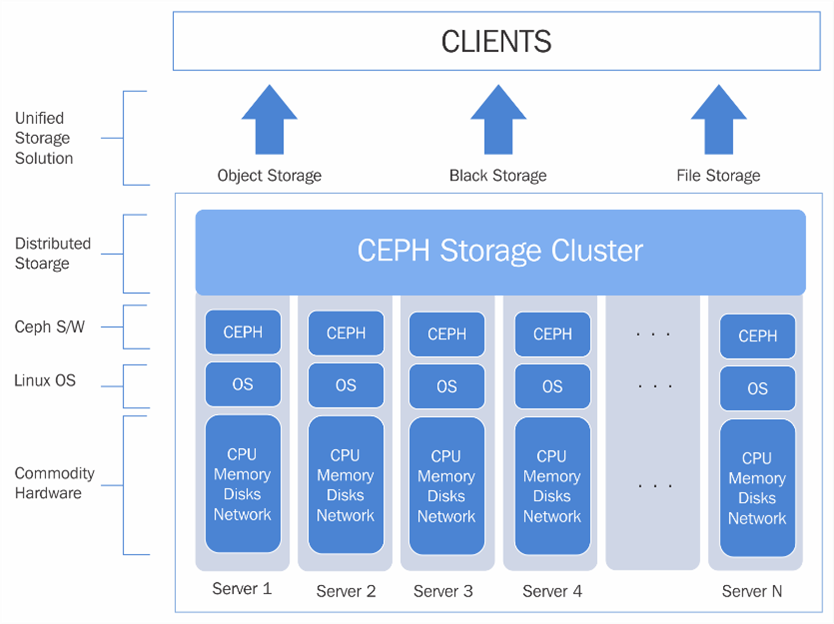

Distributed Object Storage

To keep the benefits of filesystem-based object storage and overcome the limitations around access to the storage, distributed object storage systems such as Ceph and Swift were built. Their design is illustrated below, where a “storage cluster” is built by distributing objects across any number of block devices (e.g., bare metal disks). This storage is then made accessible through microservices with network accessible APIs to store and retrieve blocks, and fine-grained access control.

An example distributed object storage deployment with Ceph

(Credit: https://insujang.github.io/2020-08-30/introduction-to-ceph/)

Benefits of distributed object storage systems such as Ceph and Swift are their ability to scale in storage and bandwidth by adding more disks to the cluster. They have significant controls exposed around the distribution of objects across block devices to achieve redundancy if desired (e.g., through erasure coding), cloning, snapshotting, and thin provisioning for efficiency.
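To make the idea of distributing objects across devices concrete, here is a deliberately simplified placement sketch using rendezvous (highest-random-weight) hashing. It is not how Ceph or Swift place data (Ceph uses its own CRUSH algorithm and Swift uses a ring); the `place` function and the device names are hypothetical and device names are assumed to be unique.

```python
import hashlib
from typing import List, Sequence


def place(object_key: str, devices: Sequence[str], replicas: int = 2) -> List[str]:
    """Pick `replicas` distinct devices for an object by ranking hash scores."""

    def score(device: str) -> int:
        # Each (device, object) pair gets a stable pseudo-random score.
        digest = hashlib.sha256(f"{device}:{object_key}".encode("utf-8")).digest()
        return int.from_bytes(digest[:8], "big")

    ranked = sorted(devices, key=score, reverse=True)
    return ranked[:replicas]
```

With this scheme, adding a device only moves the objects for which the new device ranks among the top scores; every other object keeps its existing placement, which is the property that lets a cluster grow without reshuffling everything.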

The main downside of distributed object storage systems is their complexity. Their design is focused on multiple block devices, with multiple binaries and services to run and maintain the storage system. This would add a lot of complexity for our product Scout, which often runs on-premises with a single storage block device. It would also require users of our product to know significantly more in order to operate the storage system and troubleshoot any issues with it.

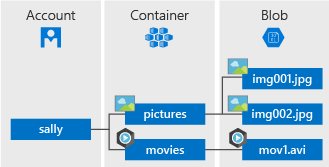

Cloud-based Object Storage

Benefits of the cloud-based object stores are the simplicity they provide in managing everything for you, where your systems will only need to access them via APIs to store and retrieve objects. You do not need to worry about maintaining any services to keep the object stores running, and you get the benefits of the cloud to keep accessing more storage capacity as you need it, fine-grained access controls per container, and the ability to deploy in multiple regions to minimize latency. Cost of the object stores is often more favorable than increasing the size of your primary partitions to store information (e.g., in a filesystem-based object storage approach), since primary partitions are often faster storage options (higher IOPS) at higher costs.

There are downsides to cloud-based object stores. Your product or solution must have network connectivity to the cloud. Our product Scout, as an example, is deployed in many on-premises scenarios behind firewalls and within private networks with no connectivity guaranteed. Therefore, we could not base our entire solution on cloud-based storage without a guarantee of connectivity to those services.

Bringing the Options Together with Hybrid-Cloud Object Storage and MinIO

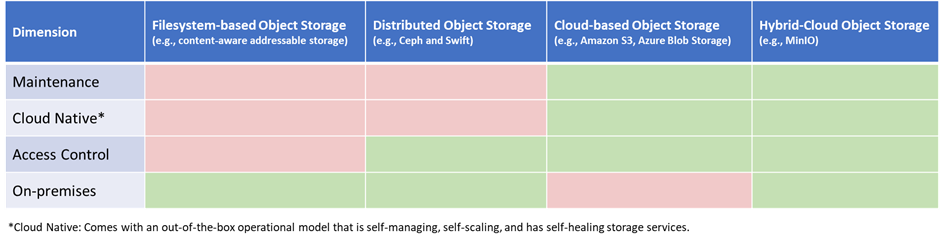

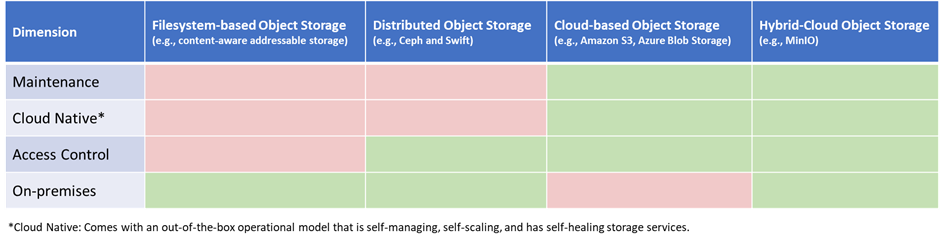

We have presented three system designs for object storage: filesystem-based, distributed, and cloud-based. Each approach comes with varying degrees of maintenance, scalability, detailed access control, and ease of on-premises operation. We have summarized this below and will introduce hybrid-cloud object storage in comparison to the previously discussed designs.

Hybrid-cloud object storage combines cloud native object storage (e.g., in S3) when desired with the flexibility of operating on-premises. Though there may be other options available that we are not aware of (please let us know!), we have found MinIO to be a leading option for hybrid-cloud object storage. As we will show through MinIO, switching between cloud-based object storage and an on-premises operational model only takes a configuration change. This means that you can build object storage into your product once with a hybrid-cloud technology like MinIO and still remain flexible across many operational scenarios.

MinIO uses a single binary for operations and a single service for each server in distributed mode. A single binary, rather than multiple services that each require setup and maintenance, is a significant advantage of MinIO's hybrid-cloud approach over Ceph and Swift, which are more complex to set up and maintain. At Soroco, this was a major reason why we chose MinIO and deprecated some of our own internal storage services, reducing our object storage maintenance costs by 90%.

Another major benefit of the hybrid-cloud object storage approach for Soroco is that MinIO is cloud native via a Kubernetes Operator, which is supported directly by the core MinIO team. This means that it has built-in support to self-manage, self-scale (e.g., obtain more storage as needed), and self-heal any services that fail. With MinIO, cloud native support means:

- High availability of its runtime (self-healing of its service)

- Zero downtime upgrades

- Backups and restores

- Resource scaling (disk, compute, network, memory…)

- Permissions and security controls

Below we demonstrate the flexibility and scalability of MinIO. First, we will show a simple example of it running on a single node with local storage. Then, we will reconfigure it to operate through an S3 bucket. To help our readers, we will link to an example of running it in distributed mode. Finally, we will provide guidance on how to migrate to MinIO based on our experience.

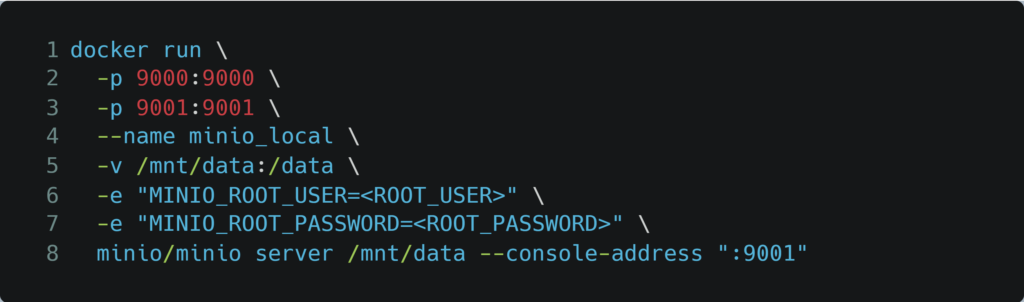

Another option for getting a single server up and running with local storage is to pull and start the MinIO-maintained Docker container:

A quick way to check the accessibility of the object storage is to navigate to the MinIO object browser that we started at http://127.0.0.1:9001/. The login credentials are the root user credentials that were set. If the server was run with no root user configuration, the default credentials are minioadmin:minioadmin. Logging in takes you to the main console, where you can monitor the health of the object storage and optionally use the UI to set up users with access control.

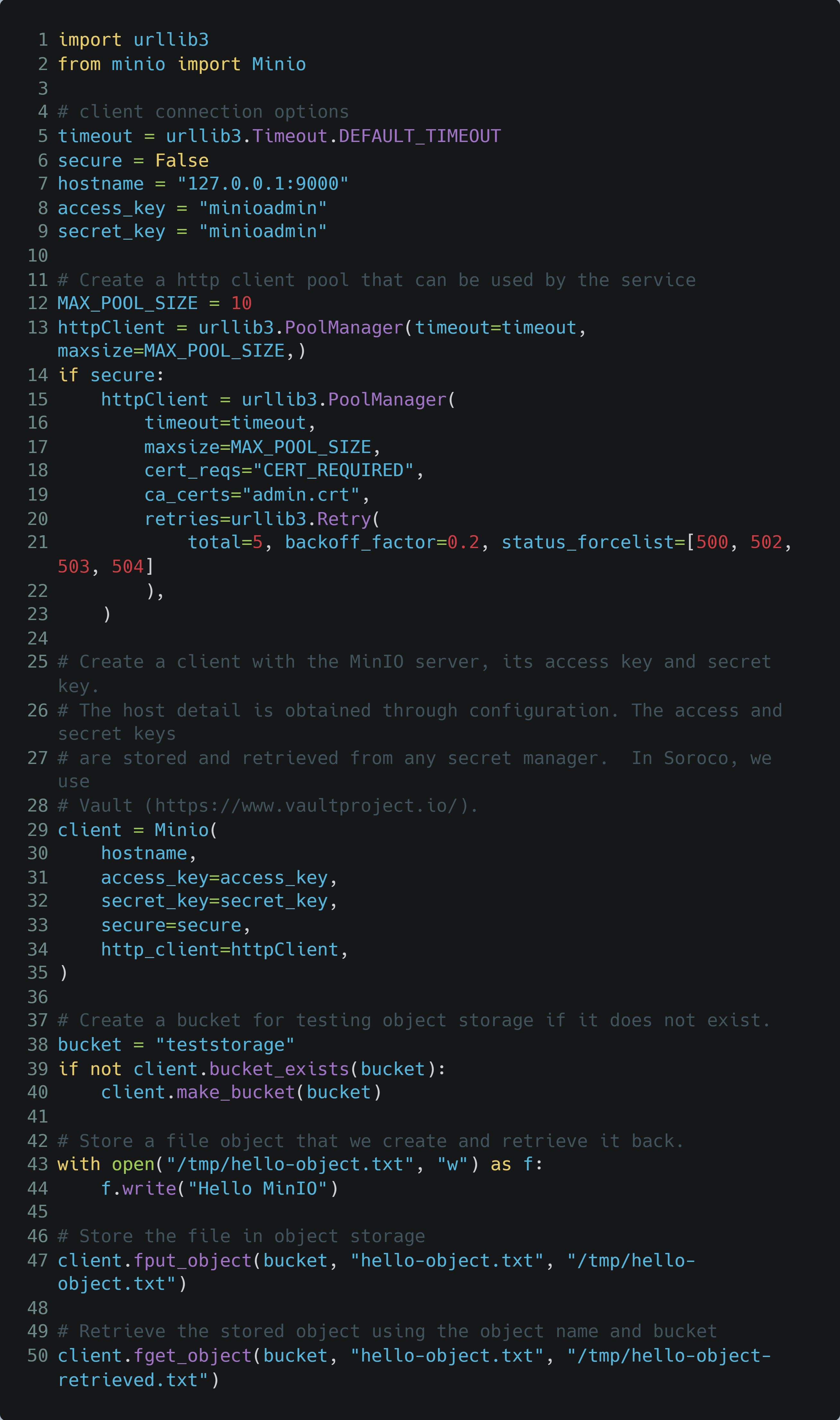

Next, let’s use the Python MinIO client library to connect to the storage, store an object that we will create, and retrieve it back to test the end-to-end storage process. First, install the Python MinIO client with pip:

Create a Python file for the following example which you will be able to run with your MinIO server running locally:
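The original listing is not reproduced here, so the following is a minimal sketch of such a round trip rather than the authors' exact code. It assumes the `minio` package from PyPI is installed, a server is listening on 127.0.0.1:9000 without TLS, and the default minioadmin credentials are in use; the helper names, bucket name, and object name are hypothetical.

```python
import io


def make_local_client():
    """Build a client for a MinIO server on 127.0.0.1:9000 (plain HTTP)."""
    # Imported here so round_trip() below can also be exercised with a
    # stand-in client when the minio package is not installed.
    from minio import Minio

    return Minio(
        endpoint="127.0.0.1:9000",
        access_key="minioadmin",  # default root credentials; change for real use
        secret_key="minioadmin",
        secure=False,             # assumption: local server without TLS
    )


def round_trip(client, bucket: str, object_name: str, payload: bytes) -> bytes:
    """Store `payload` in the bucket, then read it back and return the bytes."""
    if not client.bucket_exists(bucket_name=bucket):
        client.make_bucket(bucket_name=bucket)

    client.put_object(
        bucket_name=bucket,
        object_name=object_name,
        data=io.BytesIO(payload),
        length=len(payload),
        content_type="application/json",
    )

    response = client.get_object(bucket_name=bucket, object_name=object_name)
    try:
        return response.read()
    finally:
        # Release the underlying HTTP connection back to the pool.
        response.close()
        response.release_conn()
```

With the server running, appending a line such as `print(round_trip(make_local_client(), "scout-demo", "hello.json", b'{"hello": "minio"}'))` to the file should print the same bytes that were stored.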

Through the above example, you should have been able to get a local object store running with MinIO with its single binary. To access that store anywhere on the network, the network port (9000 in our example) just needs to be accessible.

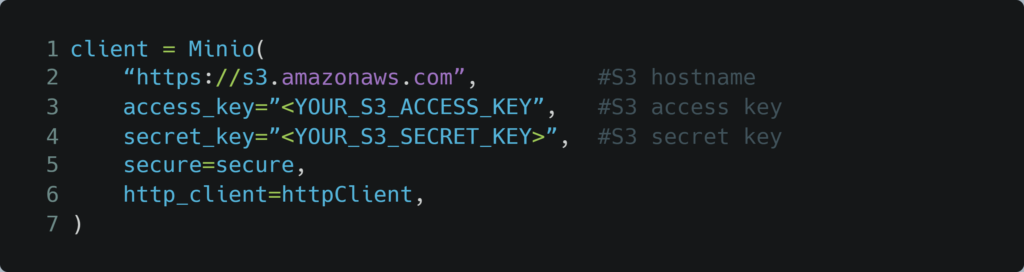

Setting the Hybrid-Cloud Object Storage to Use a Cloud-based S3 Container

Configuring the hybrid-cloud solution with MinIO to use a cloud-based container is simple. Since MinIO is S3 compatible, all that is needed is to set the host and the access credentials to S3 as shown below.
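One way to express this switch on the client side is to keep the connection settings in a single function, as in the sketch below. This is an illustration under assumptions, not the article's original configuration: the `client_settings` helper and the `"local"`/`"s3"` target names are hypothetical, and reading credentials from these particular environment variables is a choice made for the example.

```python
import os
from typing import Any, Dict


def client_settings(target: str) -> Dict[str, Any]:
    """Return keyword arguments for the MinIO Python client for a given backend."""
    if target == "local":
        return {
            "endpoint": "127.0.0.1:9000",
            "access_key": os.environ.get("MINIO_ROOT_USER", "minioadmin"),
            "secret_key": os.environ.get("MINIO_ROOT_PASSWORD", "minioadmin"),
            "secure": False,  # assumption: local server without TLS
        }
    if target == "s3":
        return {
            "endpoint": "s3.amazonaws.com",
            "access_key": os.environ["AWS_ACCESS_KEY_ID"],
            "secret_key": os.environ["AWS_SECRET_ACCESS_KEY"],
            "secure": True,   # S3 is reached over HTTPS
        }
    raise ValueError(f"unknown storage target: {target!r}")
```

A client would then be created with `Minio(**client_settings("s3"))` instead of `Minio(**client_settings("local"))`, leaving the rest of the application code unchanged.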

Once configured, the usual set of client commands can be used as demonstrated in the example from the previous section to create buckets, put files, and get files from the object store.

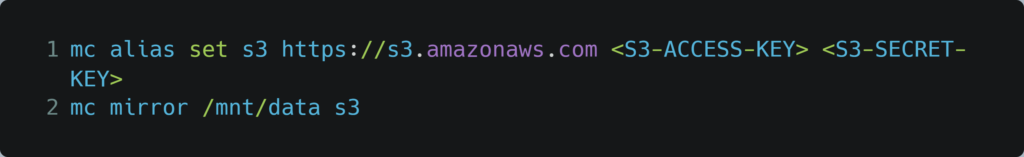

Migrating to MinIO and then to Cloud-based Storage

Concluding on Modernizing our Object Storage with Hybrid-Cloud Solutions

In this blog post, we presented multiple ways to achieve object storage along with their trade-offs, and showed how hybrid-cloud object storage can operate on-premises when suitable and cloud-natively, with self-scaling, self-healing, and self-managing properties, when desired.

At Soroco, we have found this hybrid-cloud object storage approach, particularly through MinIO, to give us tremendous flexibility in where we deploy our technology. It gives us scale and simplicity, minimizing the operational overhead of maintaining our object store. With the simple-to-use tooling and libraries provided by MinIO, we can easily integrate access to the store in our code, flexibly change the backend, and even migrate data across different operational models (e.g., to on-premises or to the cloud as needed).

We would love to hear more about your experiences with object storage, any of the technologies that we have mentioned, and especially others that we may have missed and should consider in our (or others’) journey.
