Building Large Scale Systems and Products with Python

George Nychis

- 15 April 2021

- 20 minute read

Overview

At the beginning of Soroco’s journey, we had to answer a question that many engineering organizations have had to answer before.

What programming language were we going to use when building and scaling our products?

The reason that each organization needs to answer the question on their own is that every product’s goals, needs, and constraints are different. However, even with our own goals in mind (which we will explain), no language we could pick would be perfect. We wanted to make the decision knowing each language’s potential and shortcomings, and to plan how we would overcome the key shortcomings while building our technology.

Here are the kinds of typical scenarios that we have encountered and the challenges we face when automating or discovering transactions in a live enterprise environment:

- The automated or discovered work needs to closely match what teams were already doing on the ground. That is, use the same applications, the same data, and most often follow the same steps. Therefore, a transaction in this context is determined by the steps taken by teams which manually execute the work. And this means that the right comparator set for scale and performance is the manual work that teams execute today to get the work done. Consequently, this almost always means dealing with varied and often legacy enterprise applications (including mainframes!), up to 80% of which typically do not have any API.

- Each transaction typically involves accessing approximately 7500 data fields in 71 screens, executing 216 steps, and context switching 15-890 times between enterprise applications. A single transaction takes anywhere from 5 to 20 minutes to execute.

- Data is pulled from multiple heterogeneous enterprise applications; on average each instance involves gathering data from 5-20+ applications, of which 40% tend to be legacy.

- Reading a diverse set of complex documents (e.g. invoices, legal documents, etc.) that requires complex NLP processing to extract structure from documents as well as compare, in near real-time, the semantic similarity of multiple documents. On average each process involves reading 15 different documents.

- Each automated transaction needs to have the same fidelity as humans, if not better, in terms of error rates, throughput, and reliability while being more scalable.

- Extremely high diversity in the set of processes, their steps, and the industries that they are executed in. For example, in this post alone, the data is based on 7 different industries and nearly 20 different functions.

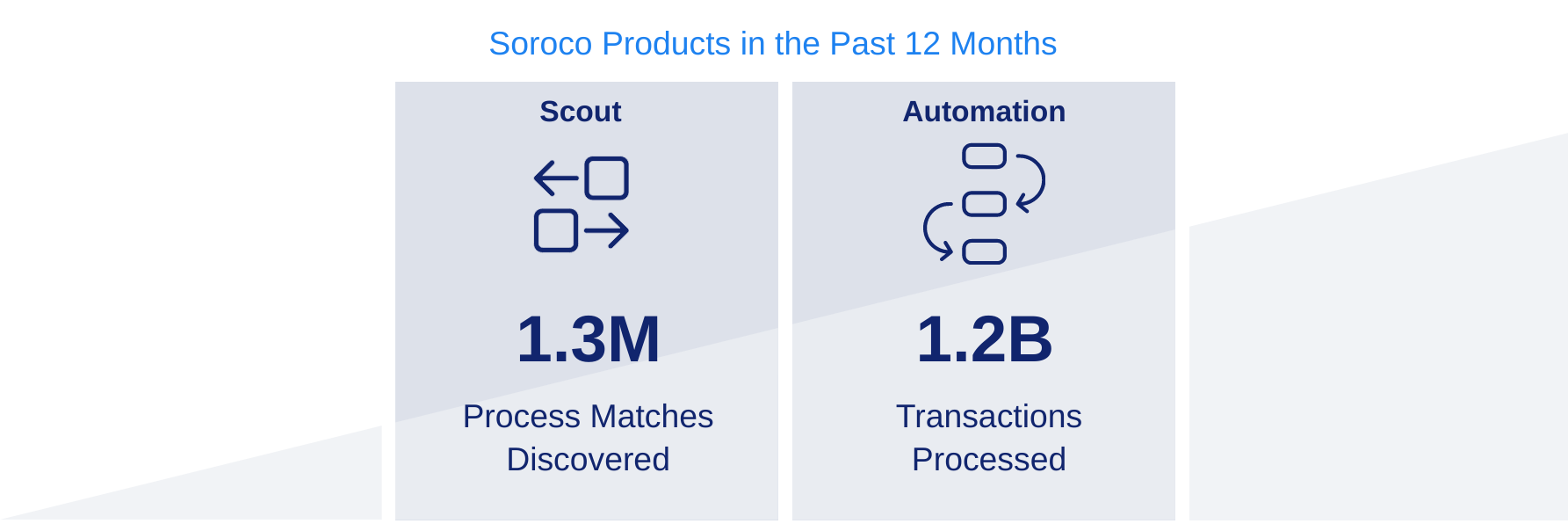

Hence, nearly 7 years ago when we set out to finalize our decision on a programming language, we were designing and developing our automation and process discovery products. Our automation product was to be capable of handling billions of transactions a year for a single business process. Our process discovery product would need to be able to process billions of data points to discover millions of processes. Both would be distributed systems and deployed globally. The challenge in automating or discovering processes is that they all run in a live enterprise and feature the issues listed above. Since then:

- In 2020, Soroco achieved the scale we planned for when making these decisions. Within the past 12 months, Soroco’s Scout product has discovered over 1.3 million process transactions covering up to about 12 million hours of manual work.

- In 2020, Soroco’s automation systems have processed over 1.2 billion enterprise transactions across multiple clients to bring our customers savings and scale to the extent of over 2M hours.

Note, however, that most of these automated systems ran in sync with people’s working hours and on working days. This is because the automation execution is typically triggered by an incoming email, document, or an event that populates data in an enterprise system. Furthermore, our ability to ‘scale’ to more transactions per second is significantly rate-limited by the delays and slowness of legacy enterprise apps that are not built for an automated layer of software running on top. Therefore, our point is not about merely optimizing for the number of transactions per second. There are many systems where Python has been optimized for this metric alone. We cannot control for this metric in an enterprise automation setting built on top of legacy systems. Rather, our point is about ensuring high-fidelity and scalable execution of automation systems in the enterprise while also meeting enterprise standards of safety and reliability.

Therefore, we needed to be able to architect and design our technology carefully. Though we think of picking a programming language to meet this kind of scale as a technical decision, it is important to keep in mind that scaling technology also means being able to scale the engineering team who builds it. The easier the product is to develop, and the easier its code is to read, deploy, secure, and maintain, the better the technology’s development can scale.

In this blog post, we will describe why Soroco chose Python and what we did to ensure we could develop reliably, at scale, and securely. Many of these properties were not ‘out of the box’ with Python 7 years ago. This was at a time when it was far from the most popular language, still considered ‘slow’ and a ‘scripting language.’ Python was far from being considered a language for building large scale systems. All of that has changed today, and in this blog post we will provide guidance in all of the following dimensions which helped us build products with Python.

- Predicted Growth of Python: Why we picked Python to make it easier to scale our engineering team, despite many of its limitations. The global education system provided hints that Python would be one of the most widely used and known languages in a few years from when we started.

- PEP484 and Enforcing Typing: How we overcame the downsides of Python not being statically typed (e.g., more potential errors at runtime) by supporting the growth of Python’s PEP484 for ‘gradual typing’ while it was still in development. We developed an early PyCharm plugin that enforced it (before mypy was complete), and even interacted with Guido van Rossum on this journey!

- Linting and Styling: Unlike languages such as Golang, which now ship with linters and styling built in, Python does not include them. We have continually and carefully picked linters and styling libraries to keep our code consistent.

- Automated Pipelines, Testing, and Security Checks: Having build pipelines from the beginning allows us to enforce our linters and to run various security checks. These checks have helped us identify various potential runtime and security issues before release.

- Packaging, Dependencies, and Hosting: To build at scale, it has always been important that we enforce proper packages, model dependencies, and importantly host the packages so that our build systems can easily pull them.

- Choices of Libraries for Scalable Processing: How we carefully chose libraries, systems, and packages for processing at scale. In particular, Pandas, numpy, and a few key others have played critical roles.

In each section we will share what libraries we have chosen that have helped us scale to where we are today. Soroco’s automation products handle billions of transactions for single business processes (built on Python), and our process discovery product handles billions of data points to find patterns in the workplace.

The Growth of Python and Scaling an Engineering Team

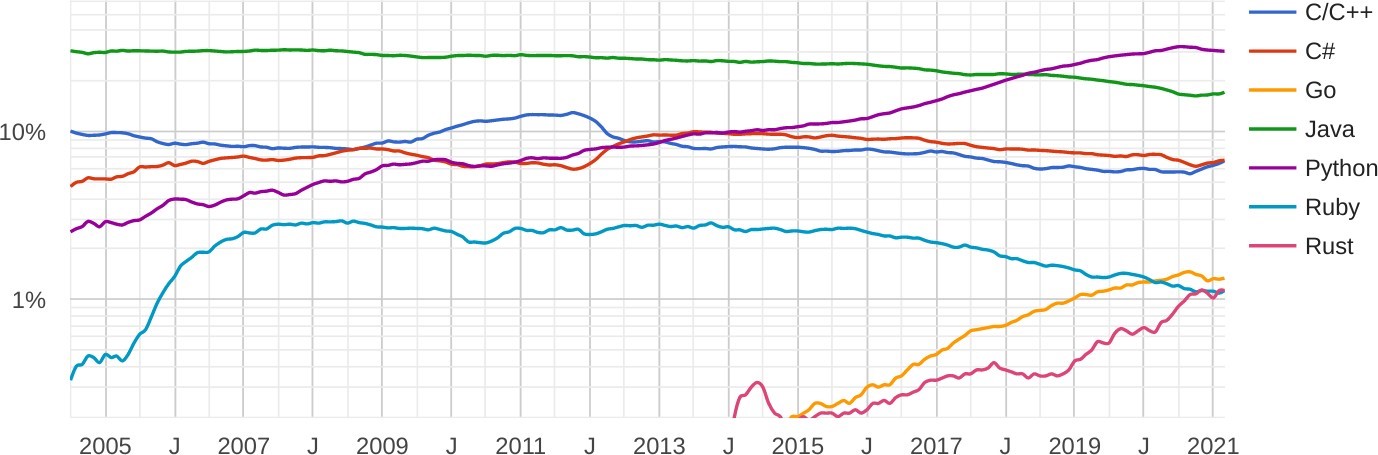

When picking from a set of programming languages that would fit our product needs in building distributed systems, we considered C++, C#, Java, and Python, and were aware of Golang and Rust as up-and-coming languages. There were many trade-offs at that time in 2014. C++, C#, and Java were well-established object-oriented languages that were all statically typed and had significant tooling built around them, such as well-established IDEs (e.g., Visual Studio and Eclipse). Python was growing thanks to its simplicity, and was well known for being duck typed, often functional in design, and lacking a lot of tooling such as a predominant IDE.

We loved the simplicity of Python and the growing ecosystem around it but feared at scale its duck typing might lead to more errors missed in development and longer development time from confusion over parameter types, variable types, and return types. We were also concerned about the source code (or compiled form) sitting open on our production systems, and lack of consistency in our development from lacking a predominant (and powerful) IDE with the language.

Despite these things, we believed that Python was going to be a major language in the next 5 years and that the community was focused on overcoming a number of these shortcomings for developing systems at scale. Looking at the diagram below which shows the popularity of languages by searches for tutorials on Google, in 2014 and 2015 it was not yet clear Python would pass the giants in systems building: Java, C/C++, and C#. In fact, in 2015 Java was still dominating and Python, C/C++, and C# were all similar in popularity. Today, Python has passed all of these languages in popularity.

There were a few indicators for us that Python might take this kind of lead in popularity many years later. First were the rapid developments and improvements in Python 3 vs. the legacy but widely used Python 2. Whereas we felt other leading languages were stagnating in quality-of-life features that make development simple, Python was making development simpler with significant improvements in exception handling, asynchronous support, and optional typing through PEP484, which we will discuss later.

When thinking about the engineering team we would recruit, we also looked to the educational system to see what was being taught. In 2014-2015 we found that many major Computer Science programs were now teaching Python as the first programming language. It was an indicator that when these millions of CS students graduated a few years later, they would be another influence in the industry by building tools and systems in Python. After all, it was the language they would know best.

To a lesser degree, but still important, web programming was also growing in its use of Python with the popularity of Django and Flask. This was a signal that web applications might also be built heavily in Python in the future, a use which has in fact grown. For Soroco, that meant we could use Python for both our distributed systems and our web applications (though C# and ASP.NET were also attractive for these same reasons).

When considering everything mentioned, we believed Python would be the major language in a few years and therefore chose it as our predominant language, though it took major efforts to deal with Python’s shortcomings early on. Most engineers we recruit now want to program in Python, which simplifies our recruiting and the scaling of our engineering team.

Python + Typing for Scaling Development (PEP484)

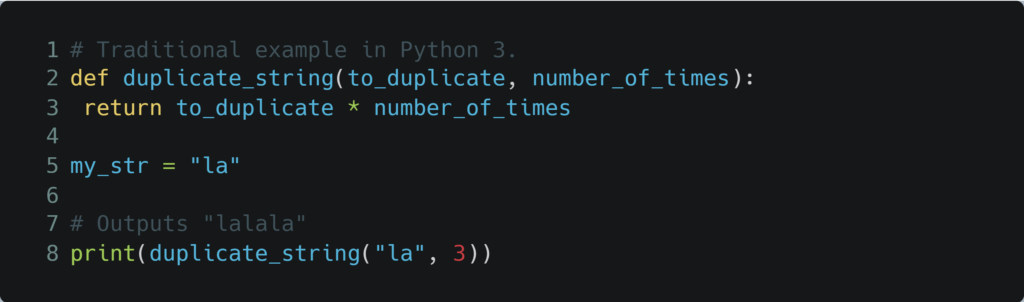

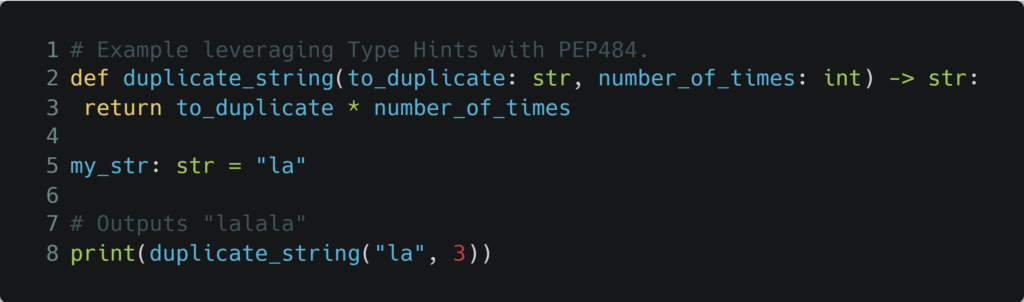

Before we get to how to enforce types with Python, we will first look at what exactly PEP484 provides in the language. Without PEP484, you might write code with the following style:
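The original example is not reproduced in this text. As a hypothetical illustration (the function, parameter, and field names below are assumptions, not Soroco's code), unannotated Python might look like this:

```python
# Hypothetical example: nothing here states what `transactions` or
# `threshold` are, so the reader has to infer every type from usage.
def summarize(transactions, threshold):
    total = 0
    flagged = []
    for t in transactions:
        total += t["amount"]
        if t["amount"] > threshold:
            flagged.append(t["id"])
    return total, flagged


result = summarize(
    [{"id": "a1", "amount": 120.0}, {"id": "b2", "amount": 15.5}],
    100,
)
```

A caller has to read the body to learn that `transactions` is a list of dictionaries with `id` and `amount` keys and that a tuple comes back.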

Considering the traditional example above, it may not be hard after sitting down for a few minutes to figure out the expected parameter types and variable types. However, with thousands of lines of code split across multiple files and packages we believed having to infer types would hinder development and lead to mistakes.

With PEP484, types were now optional and that same block of code could be written as follows, which is far more explicit for a developer to understand the types of the variables for usage purposes and avoiding mistakes:
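The original example is not reproduced in this text. As a hypothetical illustration of PEP484-style annotations (all names below are assumptions, not Soroco's code), a small function might be written as:

```python
from typing import List, NamedTuple, Tuple


class Transaction(NamedTuple):
    """Hypothetical record type used only for this illustration."""

    txn_id: str
    amount: float


def summarize(
    transactions: List[Transaction], threshold: float
) -> Tuple[float, List[str]]:
    """Return the total amount and the IDs of transactions above threshold."""
    total: float = 0.0
    flagged: List[str] = []
    for t in transactions:
        total += t.amount
        if t.amount > threshold:
            flagged.append(t.txn_id)
    return total, flagged
```

The signature alone now tells a caller what to pass in and what comes back, without reading the body.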

We found this to be a significant improvement while maintaining the simplicity of the Python language.

However, at Soroco we did not want type “hints”, nor did we want typing to be optional. We wanted to enforce it; otherwise, we risked parts of our code being difficult to use.

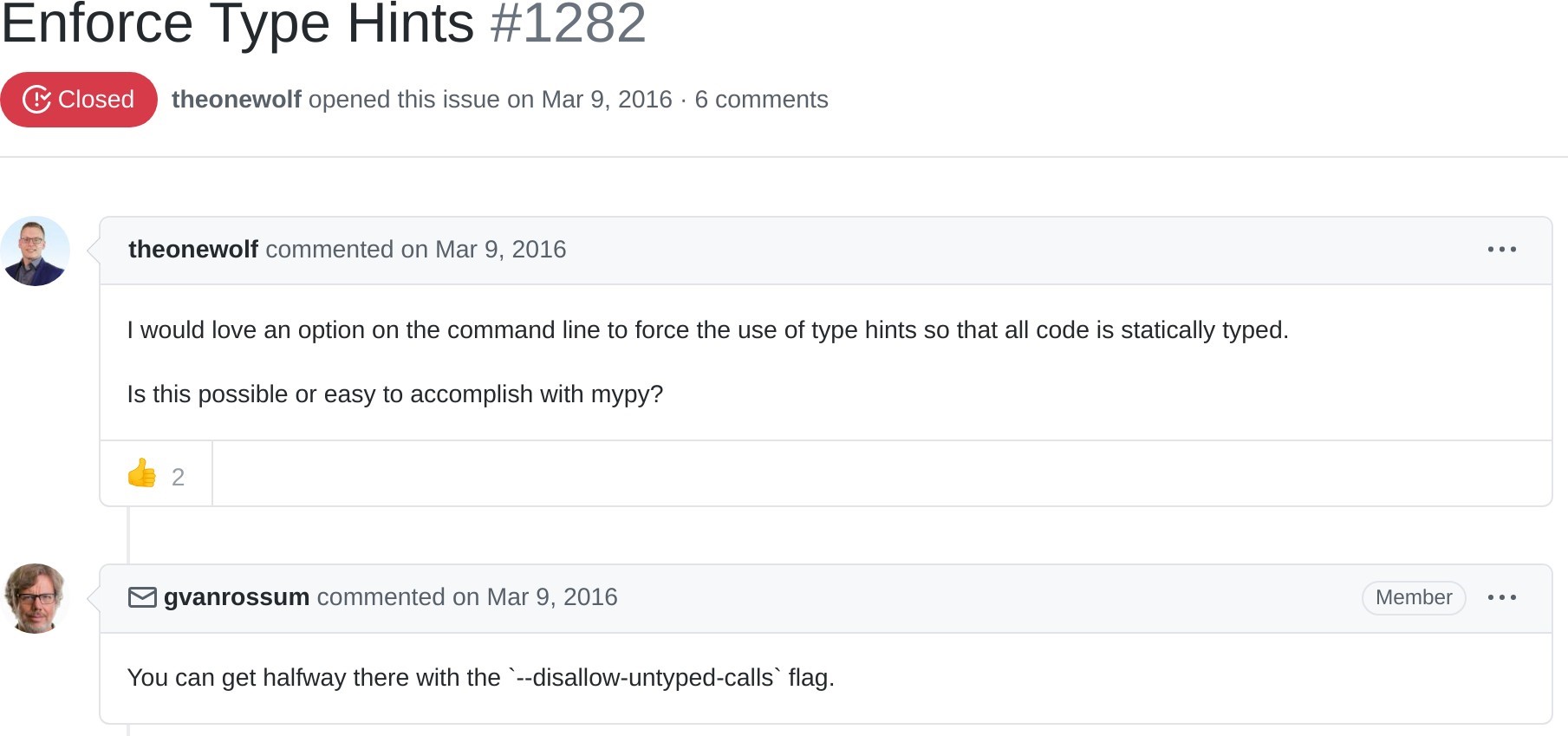

Enforcing typing was not so easy at the time. PyCharm, the major Python IDE at the time, did not have such functionality. In early 2015 and 2016 we therefore built a plugin for PyCharm that enforced typing. It would throw errors when our engineers did not specify a type for local variables, function parameters, return values, or class variables. We knew we could not keep up the maintenance of the plugin over time, but it was a great stopgap. Next, we turned to the general Python community for a solution.

Neither Wolf nor I ever expected that Guido van Rossum himself would comment on the issue, giving us direction. Beyond the bit of excitement we shared over that small comment, it reinforced our direction: the creator of Python was paying close attention to the importance of typing.

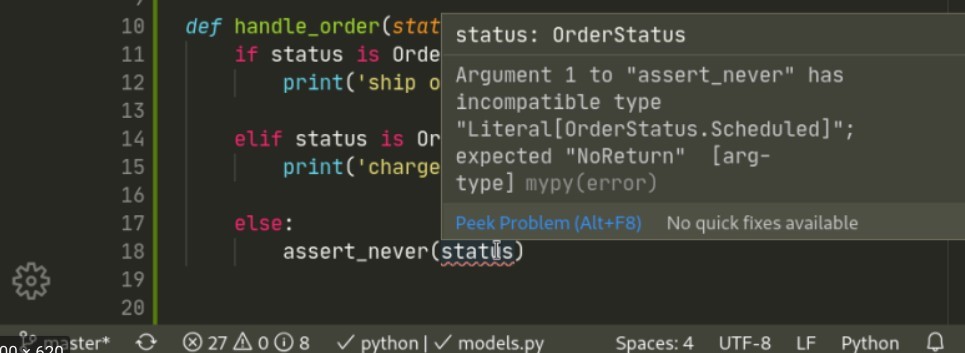

With PEP484 and mypy we had a clear path forward for Python with typing enforced. This has been critical for scaling our development: our modules are easy to develop, and how to use them is easy to understand. Today, mypy has great support directly in VS Code for enforcing and throwing errors when types are not specified during development. As we will also discuss later, mypy is part of our standard build pipelines to further enforce (and double check) that all of our code is typed when committed.

Finally, we had the benefits of statically typed languages like C/C#/C++/Java while maintaining what we believe is the simplicity of Python and its libraries. Typing would later be directly supported in modern IDEs for Python (like PyCharm and VS Code).
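To make concrete the kind of rule being enforced (every function parameter and return value annotated), here is a minimal sketch using the standard-library `ast` module. It is not Soroco's PyCharm plugin and not how mypy works internally; the function name `find_untyped_functions` is hypothetical, and the sketch ignores `*args`, `**kwargs`, and local variables.

```python
import ast
from typing import List


def find_untyped_functions(source: str) -> List[str]:
    """Return names of functions missing a parameter or return annotation."""
    untyped: List[str] = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            # Positional-only, regular, and keyword-only parameters.
            params = node.args.posonlyargs + node.args.args + node.args.kwonlyargs
            missing_param = any(
                p.annotation is None
                for p in params
                if p.arg not in ("self", "cls")  # conventionally unannotated
            )
            if missing_param or node.returns is None:
                untyped.append(node.name)
    return untyped


sample = '''
def typed(x: int) -> int:
    return x + 1

def untyped(x):
    return x + 1
'''
print(find_untyped_functions(sample))
```

In practice a maintained checker such as mypy, configured to reject untyped definitions, is the better choice; the sketch only shows that the rule is mechanical enough to enforce automatically.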

Linting and Styling with Python to Normalize Development

Scaling our development of Python also meant standardizing linting and styling. The options available for this grew significantly between 2015 and 2021 with the growth of the language. We share the choices that we have made in styling and linters to ensure we develop similarly and follow common standards.

For formatting, we use black and for sorting imports we use isort. In particular, we find black to be a very strong project for enforcing PEP8, the standard style format for Python. Though we spent time trying to decide what parameters we should use as defaults, we ultimately settled on using black’s default parameters in full. Though seemingly simple, we also believe in standardizing the sorting of imports, which is where isort is important.

Linting also plays a critical role in standardizing and scaling our development. We have used bandit as a mandatory linter since beginning our use of Python. The bandit project was built by the OpenStack community to find very common security issues in Python code. We use flake8 to maintain good syntax, formatting, and styling in our code. Inside of flake8 is pycodestyle as well. A much debated setting in flake8 for us was the 88 character line length. We have gone back and forth between 88 and 120 characters. As already discussed in depth, mypy is a critical linter for Soroco, since Soroco enforces the use of PEP484 in its code to get the benefits of static typing in Python.

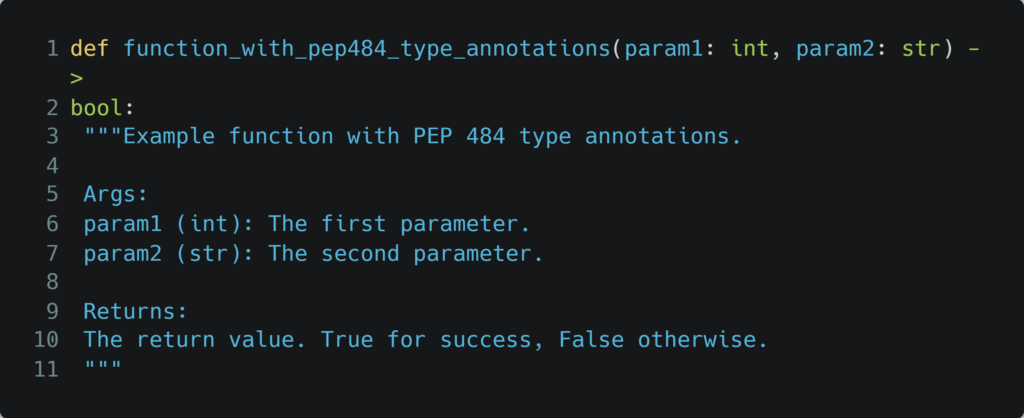

For documenting functions, classes, and other objects, we have found that Google-style docstrings are continually updated to support newer features of the language. An example of this styling from the Napoleon project, with PEP484 annotations, is as follows:
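The Napoleon example itself is not reproduced in this text. As a hypothetical illustration in the same style (the function below is an assumption, not taken from Napoleon or from Soroco's code), a Google-style docstring on a PEP484-annotated function can look like this. Because the types live in the signature, the `Args` and `Returns` sections do not need to repeat them.

```python
from typing import List


def moving_average(values: List[float], window: int) -> List[float]:
    """Compute the simple moving average of a sequence.

    Args:
        values: The numbers to average, in order.
        window: The number of consecutive values in each average.
            Must be at least 1.

    Returns:
        One average per full window, so the result has
        ``len(values) - window + 1`` items (empty if there are
        fewer values than ``window``).

    Raises:
        ValueError: If ``window`` is less than 1.
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    return [
        sum(values[i : i + window]) / window
        for i in range(len(values) - window + 1)
    ]
```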

Though periodically discussed, we have not enforced an IDE. Our development team predominantly uses PyCharm, VS Code, and a handful of powerful text editors (Vim, Emacs, etc.). Overall, as long as the code passes through our linting and automated pipeline checks, we ultimately do not mind what code editor our developers use. What comes out of the development lifecycle is more important to us than micromanaging the development down to a particular IDE.

Automated Pipelines, Testing, and Security

With linting, styling, and security checks in place, it is important to build a pipeline that enforces running all of them. This catches errors and issues that arise when a developer’s local build environment differs from the remote one, or when a developer has forgotten to make a change remotely.

With these general development checks, the package’s test cases should also be run. Though there are many options for testing, pytest continues to be one of the most full-featured testing frameworks for Python and for these reasons we use it. It is important to put these testing steps directly into the pipelines to build up a proper CI/CD pipeline.
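As a minimal sketch of what such a test looks like, assuming a hypothetical helper `normalize_invoice_id` (not from Soroco's code): pytest collects functions whose names start with `test_` from files named like `test_*.py`, and plain `assert` statements are enough.

```python
# test_invoice.py (hypothetical file, function, and test names)


def normalize_invoice_id(raw: str) -> str:
    """Strip surrounding whitespace and upper-case an invoice identifier."""
    return raw.strip().upper()


def test_strips_whitespace_and_uppercases() -> None:
    assert normalize_invoice_id("  inv-001 ") == "INV-001"


def test_is_idempotent() -> None:
    once = normalize_invoice_id("inv-002")
    assert normalize_invoice_id(once) == once
```

In a real project the helper would live in the package and the test file would import it. Running `pytest` as a pipeline step then fails the build when any assertion fails.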

There are many options for building automated pipelines. Early in Soroco, most of our CI jobs ran on-premises with GitLab runners. More recently, Soroco has moved to Azure DevOps with a mix of runners on-premises and in the cloud. Many of our ML projects that use GPUs run on-premises CI pipelines with internal servers that have GPUs. Other cloud-based CI services also continue to grow (e.g., CircleCI and Travis CI). Another on-premises option that would still work well for Python would be Jenkins.

As mentioned previously, security checks for Python are important and bandit continues to be a strong choice for checking for common issues. More recently, Soroco has begun to leverage Snyk due to their tracking of known vulnerabilities with open-source packages. With such a rich ecosystem of third-party libraries available in Python that our developers use, this has been a growing need to enforce.

Securing Soroco’s intellectual property when shipping Python code was another security concern of ours. Though compiling the code would obfuscate it, parts of the code could easily be swapped out and decompiled. Because securing Python code in itself is a major challenge, we built and open sourced a package for encrypting Python packages where the loader is modified to verify and decrypt it on load. This work is available on Soroco’s GitHub under our PYCE project.

Packaging, Dependencies, and Hosting

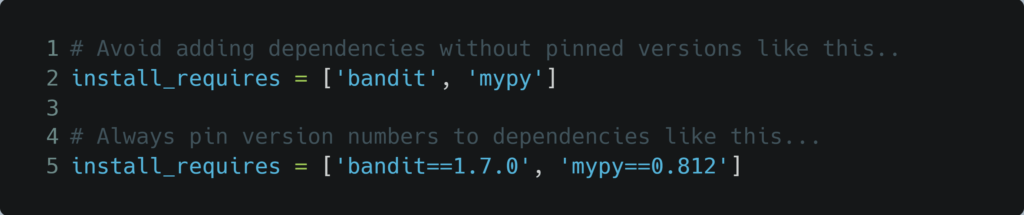

Managing Python-built systems at scale is improved significantly by ensuring that projects are proper Python packages and that dependencies across them are modeled and maintained. While it may be tempting to ‘move fast’ and add dependencies without version pinning, doing so can be extremely dangerous, just as in any other language. When packaging and deploying without pinned versions, the exact package version can vary by environment, which can lead to different behavior on different systems. Even more dangerous, we have found that packages sometimes change their software license, so without pinning you risk pulling an undesirable license into the code.
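One way a pipeline could guard against unpinned dependencies is a small check over the requirements file. The sketch below is an assumption about how such a check might look, not Soroco's tooling: the function name and regular expression are hypothetical, and it deliberately treats anything other than a plain `name==version` line (including environment markers) as unpinned.

```python
import re
from typing import List

# Matches "name==version", optionally with extras such as "name[extra]==1.0".
PINNED = re.compile(r"^[A-Za-z0-9._-]+(\[[A-Za-z0-9,._-]+\])?==[^=\s]+$")


def unpinned_requirements(lines: List[str]) -> List[str]:
    """Return requirement lines that do not pin an exact version."""
    problems: List[str] = []
    for line in lines:
        req = line.split("#", 1)[0].strip()  # drop comments and whitespace
        if not req or req.startswith("-"):  # skip blanks and pip options
            continue
        if not PINNED.match(req):
            problems.append(req)
    return problems


example = [
    "pandas==1.2.4",
    "numpy>=1.19",
    "requests",
    "# a comment",
    "flask==1.1.2  # web",
]
print(unpinned_requirements(example))
```

A pipeline step could fail the build whenever the returned list is non-empty.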

With a large enough product built on Python with multiple repositories and packages, you will want to host them in a private package repository that is reachable by your deployment pipelines. Historically, it was common to host a basic pypi server. Now, many secure cloud-based solutions are available. For example, Azure Artifacts provides private repository support with significant tooling, security, and maintenance functionality built in. Another popular solution is Artifactory. If you still prefer to host your packages on-premises, we suggest looking at Sonatype’s Nexus, which can mirror pypi and host your private packages. Nexus also has strong support for hosting container images. Overall, we would suggest using a cloud-based service if possible, which will simplify some of your continuous deployment. However, making on-premises systems accessible via the Internet will also work well if secured properly.

When packaging your Python-based projects, be sure to properly use tags when releasing, and configure your build pipelines to read tags so they can take additional automated steps on release. By checking for tags on build, you can easily automate connecting a tag to a release process into your private package repository.
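As a sketch of the tag check, assuming release tags follow a `v1.2.3` convention and the pipeline passes the full Git ref to the script (both are assumptions; how the ref is exposed depends on the CI system, and `release_version` is a hypothetical name):

```python
import re
from typing import Optional

# Assumed convention: release tags look like "v<major>.<minor>.<patch>".
TAG_PATTERN = re.compile(r"^refs/tags/v(\d+\.\d+\.\d+)$")


def release_version(git_ref: str) -> Optional[str]:
    """Return the version for a release tag ref, or None for any other ref."""
    match = TAG_PATTERN.match(git_ref)
    return match.group(1) if match else None


print(release_version("refs/tags/v1.4.0"))
print(release_version("refs/heads/main"))
```

A build step can run the publish stage only when this returns a version, and use that version when uploading to the private package repository.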

Performance and Scalable Processing in Python

Up until now, we have focused primarily on the development and release cycle. As final guidance in this blog post, we will share what we have learned about performance and scalable processing in Python. In particular, how we carefully select libraries and how we use them to be able to process billions of transactions and data points across our automation and process discovery products.

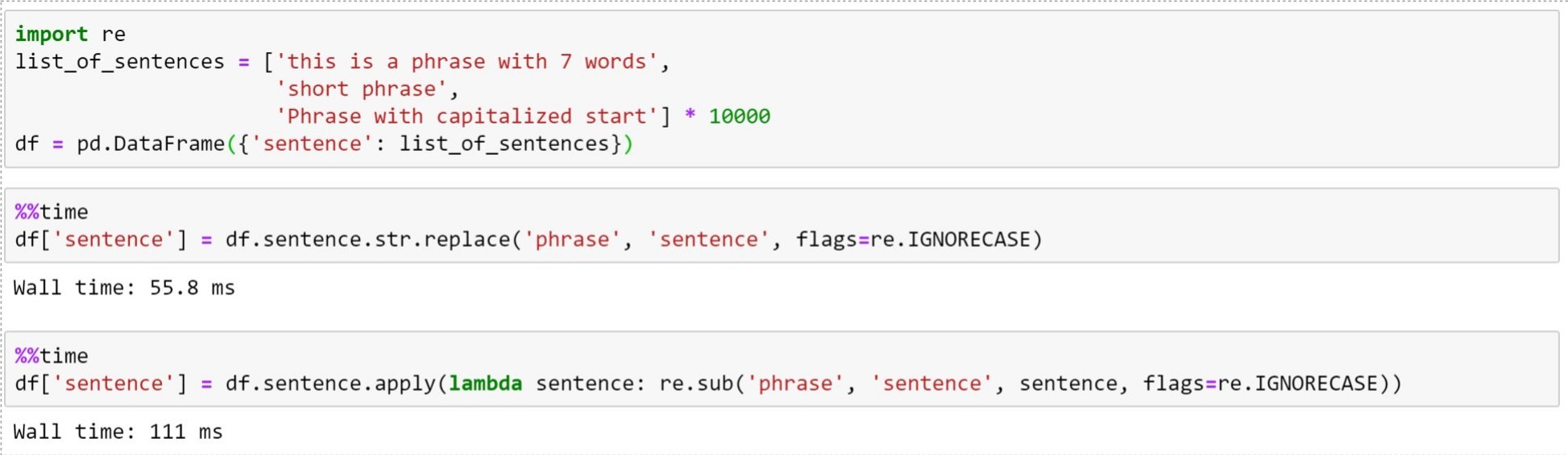

The most important part is benchmarking. There are many ways to benchmark Python code, in particular pytest-benchmark, cProfile, and pycallgraph. However, something as simple as using %%time in a Jupyter Notebook to compare different calls is simple and powerful. A significant portion of Soroco’s data analysis code is first built in Jupyter notebooks with data sets where we prototype and benchmark the code.
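Outside a notebook, the same kind of side-by-side comparison can be sketched with the standard-library `timeit` module (a substitute chosen here for illustration, not one of the tools named above). The two functions are hypothetical stand-ins for "two ways of doing the same thing". The timings depend on the machine and interpreter, so none are shown.

```python
import timeit


def join_with_loop(n: int) -> str:
    """Build a string by repeated concatenation."""
    out = ""
    for i in range(n):
        out += str(i)
    return out


def join_with_builtin(n: int) -> str:
    """Build the same string with str.join."""
    return "".join(str(i) for i in range(n))


# Time 50 calls of each implementation on the same input size.
loop_s = timeit.timeit(lambda: join_with_loop(10_000), number=50)
builtin_s = timeit.timeit(lambda: join_with_builtin(10_000), number=50)
print(f"loop: {loop_s:.4f}s  builtin: {builtin_s:.4f}s")
```

The useful habit is measuring both candidates on realistic data before choosing, rather than assuming which one is faster.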

For data manipulation and data analysis, Pandas is widely used in Soroco and extremely efficient at large data manipulation. This is because of the efficient libraries it uses (e.g., numpy), which have many functions that are performance sensitive written in C. If these libraries are used properly, you will find that the performance you get even with heavy IO operations (where many people “think” Python is slow) will actually be quite fast. The following guide is great on IO operations and Pandas.

One area where I consistently find myself guiding our engineering team, in code reviews or when collaborating on large data projects, is that simply using these libraries for occasional operations is not going to get the performance we may need. For example, getting Pandas to construct a DataFrame and then writing a Python loop that iterates over the rows can take orders of magnitude more time than properly vectorizing your operations. A rule of thumb that I follow is to never manually loop over any rows or columns in DataFrames. The following blog post (credit to the author, Sofia) has a great example of the speed differences. Without getting into the depth of the actual operations, the author’s results show the massive performance differences between looping to perform operations and properly vectorized operations.

| Methodology | Average Single Run Time | Marginal Performance Improvement |
|---|---|---|
| Crude Looping | 645 ms | |
| Looping with iterrows() | 166 ms | 3.9x |
| Looping with apply() | 90.6 ms | 1.8x |
| Vectorization with Pandas series | 1.62 ms | 55.9x |
| Vectorization with NumPy arrays | 0.37 ms | 4.4x |
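To illustrate the difference between the looping and vectorized rows in the table, here is a minimal sketch on made-up data. The column names and the computation are assumptions for illustration only; this is not the benchmark from the referenced post. Both functions compute the same per-row product.

```python
import numpy as np
import pandas as pd

# Hypothetical data: 1,000 rows of quantities and unit prices.
rng = np.random.default_rng(seed=0)
df = pd.DataFrame(
    {
        "quantity": rng.integers(1, 10, size=1_000),
        "unit_price": rng.uniform(1.0, 100.0, size=1_000),
    }
)


def total_with_loop(frame: pd.DataFrame) -> pd.Series:
    """Row-by-row Python loop: each iteration builds a Series for the row."""
    totals = []
    for _, row in frame.iterrows():
        totals.append(row["quantity"] * row["unit_price"])
    return pd.Series(totals, index=frame.index)


def total_vectorized(frame: pd.DataFrame) -> pd.Series:
    """Whole-column arithmetic: the multiplication runs in compiled code."""
    return frame["quantity"] * frame["unit_price"]


# Both approaches agree on the result; only the cost differs.
assert np.allclose(total_with_loop(df), total_vectorized(df))
```

Timing the two functions with `%%time` in a notebook on your own data is a quick way to see how large the gap is in your case.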

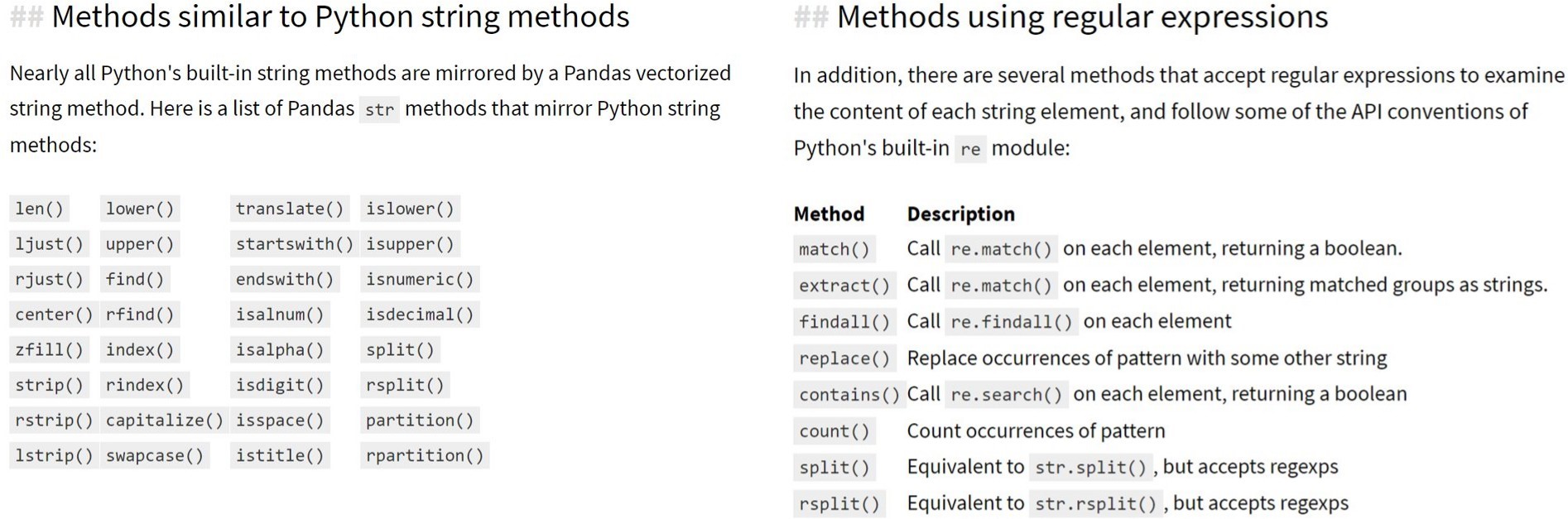

Another common set of operations in Python when doing data analysis and processing is string operations. Again, it is important to use the methods built into the Pandas framework instead of standard Python calls. Doing so can make orders of magnitude of difference. Another great reference is the Python Data Science Handbook chapter on working with strings. As the author has written:

Nearly all Python’s built-in string methods are mirrored by a Pandas vectorized string method. Here is a list of Pandas str methods that mirror Python string methods:
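The list from the handbook is not reproduced in this text. As a small illustration of the idea, using a few `.str` methods that do mirror Python's own (`strip`, `title`, `contains`) on made-up data:

```python
import pandas as pd

# Hypothetical data, including a missing value.
names = pd.Series(["  alice smith", "BOB JONES ", None, "carol"])

# Each .str method applies to every element and skips missing values,
# where a plain Python loop calling str.strip() would fail on None.
cleaned = names.str.strip().str.title()

# na=False treats the missing entry as "no match" instead of propagating it.
has_space = cleaned.str.contains(" ", regex=False, na=False)

print(cleaned.dropna().tolist())
print(has_space.tolist())
```

The chained calls read much like ordinary string code, which makes it easy to move from a per-row loop to the vectorized form.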

The libraries you should always check for fast implementations of algorithms or functions are Pandas, numpy, scikit-learn, Spark MLlib, and scipy. Outside the scope of this blog post is Soroco’s use of more tensor-based libraries like TensorFlow and PyTorch, including when and where we use them.

Conclusions

There are many different things you should consider when picking a predominant programming language. Throughout this blog post we shared various dimensions that are important to building large scale products with Python, everything from development to performance. Building large systems with Python is very doable today. Though there have been challenges in the past, this blog post has shown ways to adopt optional parts of the language (e.g., PEP484) to ensure better development. The language itself and the tooling around it continue to rapidly improve. Finally, though Soroco is predominantly building its systems in Python, Soroco still has portions of its product built in Golang and C++ as well. Ultimately, do what is best for the product but always keep in mind development and maintenance. Make it easy to develop, deploy, and maintain.

If you enjoyed reading this article and want to work on similar problems, apply here and come work with us!
